Study Challenges 'Digital Dementia' Hypothesis, Suggesting Technology May Protect Aging Brains

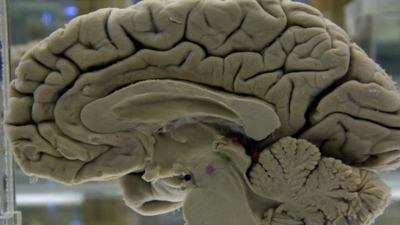

AP File Photo

What is 'digital dementia'?

Why is this new study important?

A reduced risk of cognitive decline

A question of 'how' we use technology

A rapidly changing digital world

SYDNEY: The 21st century has brought a profound change in how we live and interact with the world, driven largely by advances in digital technology. Among the most striking recent arrivals is generative artificial intelligence (AI): tools such as chatbots are reshaping how we learn and raising complex philosophical and legal questions about the implications of 'outsourcing our thinking.' Yet the move toward a more digital life is not new. The shift from analogue to digital technologies can be traced back to the 1960s, the start of what is now commonly called the 'digital revolution,' which eventually gave rise to the internet. An entire generation of people who lived through these changes firsthand is now entering its 80s. That raises an important question: what can their experience tell us about the effect of technology on the aging brain?

A comprehensive new study by researchers at the University of Texas and Baylor University in the United States sheds light on this question. Published today in the journal Nature Human Behaviour, the research found no evidence to support the so-called 'digital dementia' hypothesis. Instead, it found that use of computers, smartphones, and the internet by people over 50 may be associated with lower rates of cognitive decline.

Much has been written about the perceived harms of technology to cognitive function. The 'digital dementia' hypothesis was first proposed by German neuroscientist and psychiatrist Manfred Spitzer in 2012. It posits that our growing dependence on digital devices has weakened our cognitive abilities. Spitzer raised three main concerns about technology use: first, more passive screen time (activities that demand little cognitive engagement, such as binge-watching television shows or mindlessly scrolling through social media feeds); second, the tendency to offload cognitive tasks to technology, as when we store contact details on a device rather than memorizing phone numbers; and third, greater vulnerability to distraction.

While it is well established that technology can influence brain development, its effects on cognitive aging are far less understood. The new study, led by neuropsychologists Jared Benge and Michael Scullin, is important because it examines older adults whose relationship with technology changed dramatically over the course of their lives.

The researchers used a method called meta-analysis, which combines the results of many earlier studies. They focused on research into technology use among people over 50 and its association with cognitive decline and dementia. In total, they reviewed 57 studies covering more than 411,000 adults, which measured cognitive decline either through performance on cognitive tests or through a formal dementia diagnosis. The results were encouraging: greater technology use was linked to a reduced risk of cognitive decline. The researchers expressed this as an odds ratio, which compares the odds of cognitive decline between groups with higher and lower technology use; a value below 1 indicates lower odds. The combined odds ratio across studies was 0.42, meaning that higher technology use was associated with 58% lower odds of cognitive decline.
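To make the arithmetic in this paragraph concrete, here is a minimal Python sketch. It converts an odds ratio into a percentage reduction in odds, and it pools several studies' odds ratios using the simplest textbook approach, a fixed-effect inverse-variance average on the log scale. This is an illustration under stated assumptions, not the authors' method: the published analysis may well use a random-effects model, and the function names and the three example studies below are hypothetical, not data from the meta-analysis. Note that an odds ratio of 0.42 means 58% lower odds; this approximates a 58% lower risk only when the outcome is relatively uncommon.

```python
import math

Z_95 = 1.96  # standard normal quantile for a two-sided 95% interval


def percent_lower_odds(odds_ratio: float) -> float:
    """Percentage reduction in odds implied by an odds ratio below 1 (hypothetical helper)."""
    return round((1.0 - odds_ratio) * 100.0, 1)


def pool_fixed_effect(studies):
    """Fixed-effect, inverse-variance pooling of odds ratios (illustrative sketch).

    Each study is (odds_ratio, ci_lower, ci_upper) with a 95% confidence interval.
    Returns (pooled_or, pooled_lower, pooled_upper).
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for odds_ratio, lower, upper in studies:
        log_or = math.log(odds_ratio)
        # Recover the standard error of log(OR) from the width of the 95% CI.
        se = (math.log(upper) - math.log(lower)) / (2 * Z_95)
        weight = 1.0 / se ** 2  # more precise studies get more weight
        weighted_sum += weight * log_or
        total_weight += weight
    pooled_log = weighted_sum / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return (
        math.exp(pooled_log),
        math.exp(pooled_log - Z_95 * pooled_se),
        math.exp(pooled_log + Z_95 * pooled_se),
    )


# Hypothetical inputs for illustration only; these are NOT the 57 studies
# in the meta-analysis.
example_studies = [
    (0.40, 0.25, 0.64),
    (0.55, 0.35, 0.86),
    (0.35, 0.20, 0.61),
]

pooled, low, high = pool_fixed_effect(example_studies)
print(f"pooled OR {pooled:.2f} (95% CI {low:.2f} to {high:.2f})")
print(f"{percent_lower_odds(0.42)}% lower odds for the reported OR of 0.42")
```

Weighting by inverse variance means larger, more precise studies pull the pooled estimate harder, which is why a meta-analysis can produce a tighter confidence interval than any single study it includes.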

This association held even after accounting for other factors known to contribute to cognitive decline, such as socioeconomic status and various health conditions. Interestingly, the size of the protective association with technology use was comparable to, or even greater than, that of other established protective factors. For instance, physical activity is associated with roughly a 35% risk reduction, and maintaining healthy blood pressure with about a 13% risk reduction.

It is essential to note that while the findings of this study are promising, they should be interpreted with caution. There exists a wealth of research accumulated over many years examining the advantages of blood pressure management and physical activity, with well-understood mechanisms elucidating their protective effects on the brain. In contrast, measuring technology use presents unique challenges. The study acknowledged these complexities by focusing on specific aspects of technology usage while deliberately excluding other forms, such as brain-training games.

The implications of these findings are uplifting. However, it is still premature to claim that technology use directly causes better cognitive function. Further research is needed to determine whether these findings hold across diverse populations, especially in low- and middle-income countries, which were underrepresented in the current research, and to uncover the reasons behind these associations.

In contemporary society, it is nearly impossible to navigate daily life without some form of technology. From managing finances to planning holidays, much of daily life now happens online. Perhaps we should think more about how we use technology. Cognitively stimulating activities, such as reading, learning a new language, or playing a musical instrument, can bolster brain health as we age, especially when taken up in early adulthood. It has been suggested that sustained engagement with technology throughout life may similarly exercise our memory and thinking skills, as we adapt to software updates or learn to use new devices. Such a 'technological reserve' could benefit our brains.

Moreover, technology can help us stay socially connected and independent for longer. While the findings from this study indicate that not all forms of digital technology are harmful, the nature of our interactions with, and reliance on, technology is evolving rapidly. The impact of artificial intelligence on cognitive aging will likely become clearer over the coming decades. Nonetheless, our historical ability to adapt to technological change, and the potential for these innovations to support cognitive function, suggest that the future may hold promise rather than pitfalls.

For instance, advances in brain-computer interfaces offer new hope for people living with neurological diseases or disabilities. Nevertheless, we must remain alert to the real potential downsides of technology, particularly for younger generations, whose mental health may be adversely affected. Continued research is crucial to strike a balance between harnessing the benefits of technology and mitigating its potential harms.