The Cognitive Cost of AI: Are We Losing Our Intelligence?

Imagine being a child in 1941, preparing for the common entrance exam for public schools with only a pencil and paper in hand. You encounter a prompt asking you to write, for no more than a quarter of an hour, about a British author. For many today, answering such a question wouldn't take 15 minutes; instead, a quick query to AI technologies like Google Gemini, ChatGPT, or Siri would provide an instant response. This reliance on artificial intelligence to replace cognitive effort has become second nature. However, as evidence mounts suggesting that human intelligence may be on the decline, experts express concerns that this trend is being exacerbated by our increasing reliance on technology.

Historically, the rise of new technologies has often been met with apprehension. Studies indicate that mobile phones distract us, social media erodes our attention spans, and reliance on GPS has diminished our navigational skills. Now AI serves as a co-pilot that takes over cognitively demanding tasks, from managing finances to offering therapy and even shaping our thought processes.

So, where does this leave the human brain? Are we liberated to pursue more meaningful activities, or are we allowing our cognitive abilities to atrophy as we delegate our thinking to algorithms?

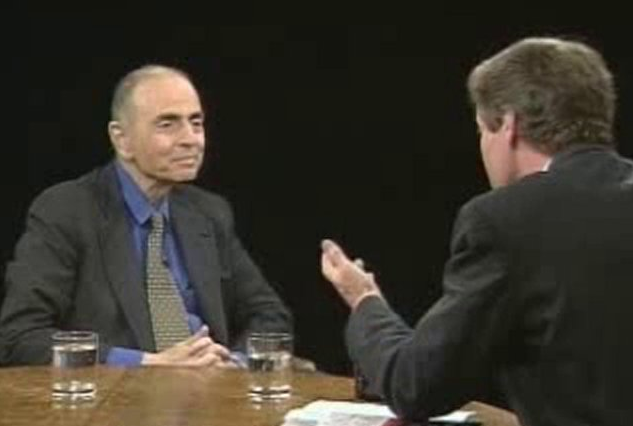

Psychologist Robert Sternberg from Cornell University, recognized for his influential work on intelligence, cautions that the greatest worry in these times of generative AI is not that it may compromise human creativity or intelligence, but that it already has.

The hypothesis that we are becoming less intelligent is supported by various studies. One of the most notable is linked to the Flynn effect, which observed rising IQ scores in successive generations globally since the 1930s, thought to stem from environmental rather than genetic factors. However, recent decades have seen a slowdown, if not a reversal, of this trend.

In the UK, the late James Flynn himself documented a decrease of more than two points in the average IQ of 14-year-olds between 1980 and 2008. Furthermore, large-scale assessments like the Programme for International Student Assessment (PISA) have reported alarming reductions in math, reading, and science scores across numerous regions. Young people today are also exhibiting shorter attention spans and diminished critical thinking abilities.

Yet, while these trends present robust empirical data, their interpretations are complex. Elizabeth Dworak, a researcher at Northwestern University Feinberg School of Medicine, asserts, "Everyone wants to point the finger at AI as the boogeyman, but that should be avoided." Dworak recently identified signs of a reversal in the Flynn effect in a significant sample of the U.S. population tested between 2006 and 2018, emphasizing the multifaceted nature of intelligence.

Intelligence, she argues, is influenced by numerous factors including micronutrients like iodine that are crucial for brain development, prenatal care, educational duration, pollution, pandemics, and technology. Isolating the impact of a single factor, such as AI, proves to be a complex challenge. "We don't act in a vacuum, and we can't point to one thing and say, 'That's it,'" Dworak explains.

While quantifying AI's impact on overall intelligence remains difficult in the short term, valid concerns arise regarding cognitive offloading and the potential decay of specific cognitive skills. Research indicates that reliance on AI for memory-related tasks could diminish an individual's own memory capacity over time.

Most studies focusing on AI's cognitive impact center around generative AI (GenAI), which enables us to offload more cognitive effort than ever before. Today, virtually anyone with access to a smartphone or computer can quickly retrieve answers, compose essays or code, and produce artistic works in mere moments. Proponents of GenAI often highlight its potential benefits, ranging from increased revenues and job satisfaction to advances in scientific research. A 2023 Goldman Sachs report even projected that GenAI could enhance global GDP by 7% over the next decade, a staggering increase of approximately $7 trillion.

However, the apprehension arises from the notion that automating these tasks deprives us of opportunities to hone those very skills, leading to a deterioration of the neural networks that support them. This phenomenon mirrors the deterioration of physical health that results from neglecting physical exercise.

One crucial cognitive skill at risk is critical thinking. Why ponder the merits of a British author when AI tools like ChatGPT can provide a pre-digested answer?

Research conducted by Michael Gerlich at SBS Swiss Business School in Kloten, Switzerland, involving 666 participants in the UK, revealed a significant correlation between frequent AI usage and diminished critical-thinking skills. Notably, younger individuals who heavily relied on AI tools scored lower in critical thinking compared to older adults. Similarly, a study by Microsoft and Carnegie Mellon University surveyed 319 professionals who used GenAI at least weekly. While GenAI boosted their efficiency, it simultaneously stifled critical thinking and fostered an overreliance on technology, which researchers predict could impair their problem-solving abilities in the absence of AI support.

One participant in Gerlich's study voiced a common sentiment: "It's great to have all this information at my fingertips, but I sometimes worry that I'm not really learning or retaining anything. I rely so much on AI that I don't think I'd know how to solve certain problems without it." Indeed, other investigations indicate that using AI systems for memory tasks may contribute to a decline in personal memory capacity.

The erosion of critical thinking ability is further complicated by AI-driven algorithms that shape our social media experience. Gerlich underscores the profound impact of social media on critical thinking, explaining, "To get your video seen, you have four seconds to capture someone's attention." This leads to an inundation of bite-sized content that is easy to consume yet discourages deeper analysis. "It gives you information that you don't have to process any further," Gerlich adds.

By passively receiving information instead of engaging in active cognitive effort, we risk neglecting the critical analysis of what we learn, including its meaning, impact, ethics, and accuracy. Gerlich warns, "To be critical of AI is difficult; you have to be disciplined. It is very challenging not to offload your critical thinking to these machines."

Wendy Johnson, who specializes in intelligence studies at Edinburgh University, observes this trend in her students. Although she hasn't conducted empirical tests, she suspects that students increasingly prefer to rely on the internet for answers rather than engaging in independent thought.

Without critical thinking skills, we struggle to consume AI-generated content wisely. Such content may appear credible, especially as we become more dependent on it, but we should remain vigilant. A 2023 study published in Science Advances revealed that compared to human-generated information, GPT-3 not only presents data in a more comprehensible form but is also capable of producing more persuasive disinformation.

The implications of AI's influence extend beyond the realm of intelligence and creativity. Sternberg articulates these worries in a recent essay in the Journal of Intelligence: "Generative AI is replicative. It can recombine and re-sort ideas, but it is not clear that it will generate the kinds of paradigm-breaking ideas the world needs to address significant issues such as climate change, pollution, violence, increasing economic disparities, and the rise of authoritarianism."

To maintain and nurture our creative capacities, the manner in which we interact with AI is crucial, whether actively or passively. Research led by Marko Müller from the University of Ulm in Germany highlights a correlation between social media use and enhanced creativity among younger individuals, a trend not observed in older generations. Müller's analysis indicates that those born into the social media era utilize it differently than their older counterparts, with younger users seemingly benefiting from idea-sharing and collaboration, while older users generally consume content more passively.

Moreover, it's vital to consider what occurs after engaging with AI tools. Cognitive neuroscientist John Kounios from Drexel University explains that, analogous to other pleasurable experiences, moments of insight trigger a rush in our brain's reward systems. These mental rewards enhance our memory of groundbreaking ideas and can encourage less risk-averse behavior, ultimately fostering further learning and creativity. However, insights generated through AI do not appear to produce a similarly potent effect in the brain. Kounios states, "The reward system is an extremely important part of brain development, and we just don't know what the effect of using these technologies will have downstream. Nobody's tested that yet."

Additionally, the long-term implications of our reliance on AI warrant closer examination. Researchers have only recently revealed that learning a second language can delay the onset of dementia by approximately four years. Yet, in numerous countries, there has been a decline in student enrollment in language courses. The convenience of AI-driven translation applications may contribute to this trend, but these tools, as of now, cannot claim to safeguard your future cognitive health.

Sternberg emphasizes the need to shift our perspective: instead of asking what AI can do for us, we should consider what it is doing to us. Until we gain a clearer understanding, Gerlich suggests that the solution lies in training humans to be more human again, utilizing critical thinking and intuition, the very skills that computers can't yet replicate and where we can truly add value.

We cannot rely on major tech companies to guide us in this endeavor, as no developer seeks to hear that their software might simplify a person's quest for answers too much. Gerlich insists, "It needs to start in schools. AI is here to stay. We must learn how to engage with it appropriately." If we neglect this responsibility, we risk not only rendering ourselves obsolete but also jeopardizing our cognitive abilities for generations to come.