Meta Launches New AI App, Expanding Beyond Smart Glasses

What You Need to Know

Meta has officially rolled out its new AI application, Meta AI, a reimagined version of the former Meta View app. The platform is no longer limited to users of the Ray-Ban Meta smart glasses; instead, it lets a broader audience engage with artificial intelligence through their Facebook or Meta accounts.

With the launch on April 29, users can now access the Llama 4 model, Meta's most advanced AI, which has 17 billion active parameters. The update not only enhances the functionality of the app but also introduces features such as image generation and more interactive conversational capabilities.

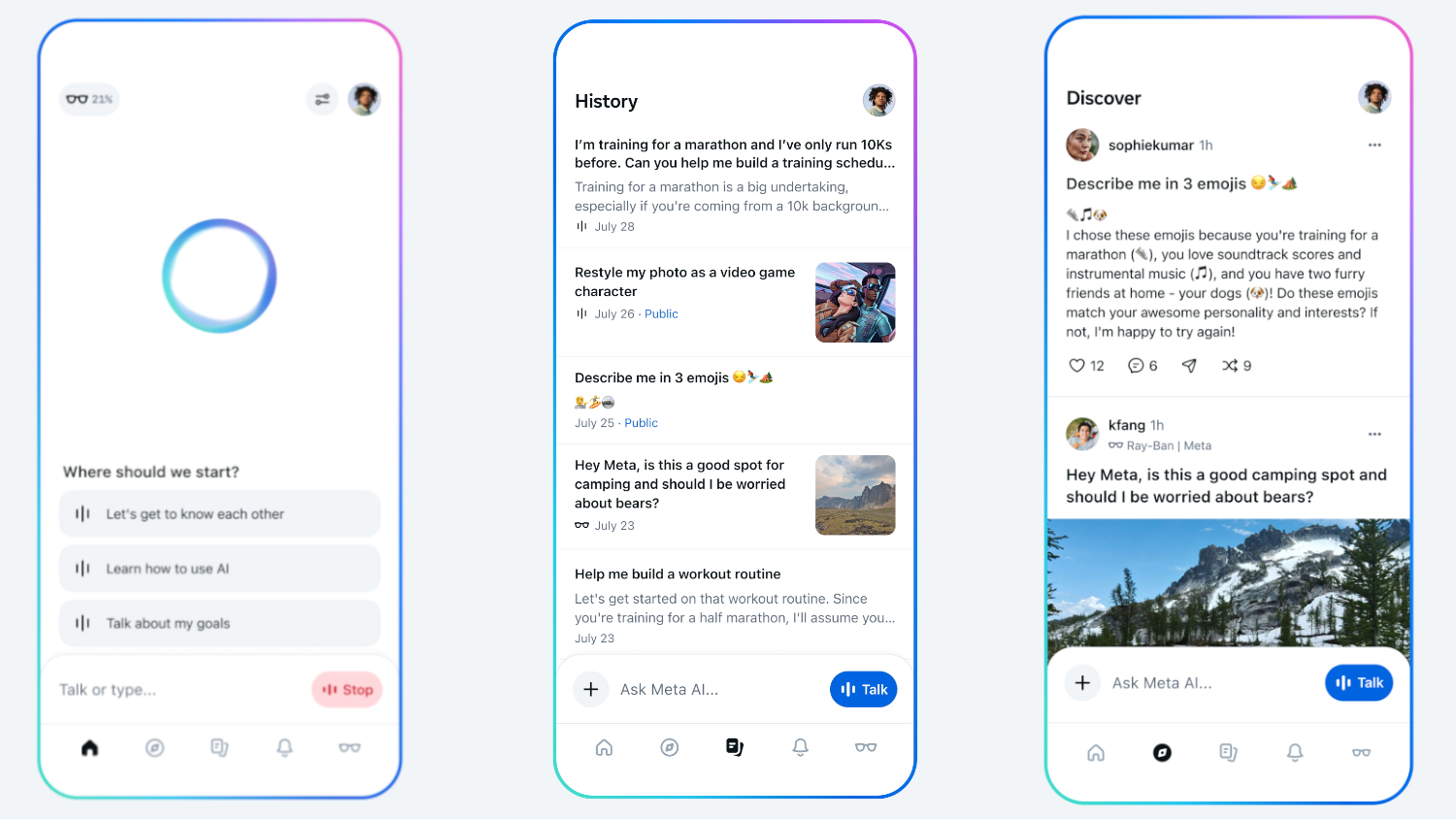

For current Ray-Ban smart glasses owners, the Meta AI application will still serve as a control hub for their devices. However, it now comes with added functionalities that significantly enhance user experience. Features like Live Translation and Live AI discussions will remain intact, but users can now conveniently "pick up where you left off" in conversations through a dedicated History tab on their smartphones.

Previously, the Meta View app was designed exclusively to support the Ray-Ban smart glasses, but the new Meta AI app is a more inclusive platform. It incorporates AI directly into the Home tab, making it accessible to everyone, whether or not they own the smart glasses.

The new capabilities of the Meta AI app include comprehensive image generation and editing tools. Ray-Ban Meta owners can now capture photographs and use the app to modify them by adding, removing, or altering elements within the image. Those who do not own the glasses can still create and export images for sharing on popular social media platforms, such as Instagram.

The AI model, Llama 4, has been designed to offer more personalized and relevant interactions, with a conversational tone that aims to improve user engagement. In regions like the United States and Canada, Meta AI will now provide tailored responses based on prior queries and user interests, drawing from data collected across other Meta platforms like Facebook and Instagram.

Another exciting addition to the Meta AI app is the Discover feed. This feature allows users to explore and remix popular prompts shared by others, fostering a creative environment while ensuring that private queries remain confidential.

Moreover, Meta has introduced a voice demo that uses full-duplex speech technology, which aims to create a more fluid and natural speaking experience. Unlike traditional AI systems, which typically operate in half-duplex mode (waiting for the user to finish speaking before responding), this new feature allows for a more dynamic conversation style. However, the full-duplex demo, which is currently available only in the United States, Canada, Australia, and New Zealand, doesn't yet have memory capabilities for retaining information from previous interactions.
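For readers who want to see the half-duplex versus full-duplex distinction in concrete terms, here is a toy sketch. It is purely illustrative and says nothing about how Meta's voice model is built: the `Chunk` type, the two functions, and the integer time steps are all hypothetical names invented for this example. The only point it makes is about timing, namely when the system is allowed to speak relative to the user.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    t: int      # time step at which the user finishes this chunk of speech
    text: str   # what the user said in that chunk


def half_duplex(chunks):
    """Stay silent while the user talks; respond once after the last chunk."""
    heard = " ".join(c.text for c in chunks)
    return [(chunks[-1].t + 1, f"reply: {heard}")]


def full_duplex(chunks):
    """Listen and speak at the same time: react to every chunk as it arrives."""
    events = [(c.t, f"ack: {c.text}") for c in chunks]
    heard = " ".join(c.text for c in chunks)
    events.append((chunks[-1].t + 1, f"reply: {heard}"))
    return events


# Hypothetical utterance split into three chunks (illustrative only).
utterance = [Chunk(0, "what is"), Chunk(1, "the weather"), Chunk(2, "today")]
for name, respond in (("half-duplex", half_duplex), ("full-duplex", full_duplex)):
    print(name, respond(utterance))
```

In this sketch the half-duplex system produces its first output only after the user has finished, while the full-duplex system emits output during the user's speech as well as after it. That overlap is what the article means by a more dynamic conversation style.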

Meta has reassured existing Ray-Ban Meta smart glasses users that the app will retain all the core features they have come to rely on. Users can expect a seamless transition as their photos and settings migrate to the updated platform without any complications.

Although the app is listed in both the Play Store and the App Store, users may find that the current version does not yet reflect all the promised updates and still requires Ray-Ban glasses for full functionality. Meta is actively rolling out these changes, and there is considerable anticipation around the Meta AI app as it enters a competitive landscape, positioning itself against other standalone AI applications like Gemini and ChatGPT.